A Brief History of the Data Centre

Part of our CEO Series of articles aimed at business people who need to know more about technology.

Data Centres are currently receiving a lot of negative press. They are viewed as outdated and unnecessary by many companies who are now embracing the Cloud as the answer to their technology infrastructure requirements. (See An Introduction to Cloud Computing for more information on the Cloud). At 17 Ways we believe that Data Centres will continue to be a core component of corporate IT for many years to come and that it is essential that any medium to large scale company has a cohesive strategy around Data Centre evolution. To understand why we take this view, we would like to take you on a quick history tour of the Data Centre and to look at its role within the corporate world.

In Cloud vs Converged we compare cloud computing to the traditional Data Centre technology described here.

What Does a Data Centre Do?

A Data Centre is just a specialised room for storing computer equipment. The room itself is only a small part of the cost, with the equipment and its management making up the bulk of the expense of running a Data Centre. Data Centres are needed to protect a company's critical IT infrastructure (servers and network equipment) from irregularities in temperature or power and from accidental interference by humans.

Personal computers have never had that kind of treatment and have had to cope in the real world. We will talk more about them in a while, but server technology traditionally lives in a Data Centre. When we say server technology we are referring to the computers that run the backend processing for applications. When you run an application on your PC at your desk, it will typically connect to a number of servers in a Data Centre. These servers run things like databases (which keep all of the critical data), processing (components that update that data and produce reports, for example) and security services (to control who can access what data).

The Data Centre is the Fort Knox of the technology department. It is where all of the critical computers and networking equipment are kept.

The 90's Data Centre

By the 90’s the Data Centre was a large room with a diverse range of equipment from different vendors. Data Centres were (and still are) expensive to run. They need a constant temperature, backup power supplies and secured access. Systems from different vendors didn’t generally interoperate, leading to duplication and “islands of technology”. So if you had a spare component such as a CD-ROM drive or a memory chip, you generally couldn't use it in any of the other equipment in your Data Centre.

Within a Data Centre the different computers lived in specialised racks, with whole rows of racks being dedicated to different vendors’ technologies. There was little standardisation and vendors typically built most of their hardware themselves rather than using other people’s parts. While this led to healthy competition, most of the hardware arms race was only delivering small, incremental change.

The real revolutionary change was happening outside the Data Centre.

X86 and Open Source

The brain of a computer is the Central Processing Unit (CPU). During the 90’s hardware vendors such as Sun, IBM and HP were building their own CPUs while PC manufacturers were using the Intel x86 chips (or clones from companies such as AMD). It is a lot cheaper to buy a CPU than to build one and the volume of PC sales drove down the production costs. This meant that PCs became much cheaper alternatives to traditional Data Centre hardware, and throughout the 90’s another island of technology was growing inside the Data Centre as more serious systems were being developed for PCs. The PC Server became a reality, despite being an oxymoron.

Standardising on x86 CPUs allowed software to run on more machines.

At the same time a movement called Open Source was gaining momentum. Each different type of CPU required different software to be developed to run on it. However, with the increase in the use of x86 processors there were suddenly a lot of different computers that could run the same software.

If you spent a lot of time developing software then you typically wanted to keep that private to protect your IP. However, a lot of people were realising that there was more P than I in the software that they were developing to do non-core activities. For example, if you are an insurance company then you care a lot about your claims handling systems but you don’t really care about the software that you use to book meeting rooms. Rather than build this stuff from scratch within each organisation or buy that software from a vendor, the alternative was for everyone to share it. The technology industry which underpins banks and multinationals might seem like a strange place for socialism to start but that is exactly what happened. Starting with low-level things like operating systems, it spread quickly, with people seeing a huge benefit in using Open Source software that anyone can use and update, but nobody owns.

We can't overemphasise what a difference Open Source has made to the IT Industry, and the largest impacts have been seen inside the Data Centre. The development of cheap PCs put high-powered computers in the hands of everyone, and Open Source allowed anyone with the time and the inclination to learn to become a software developer and to contribute their code to the Open Source world.

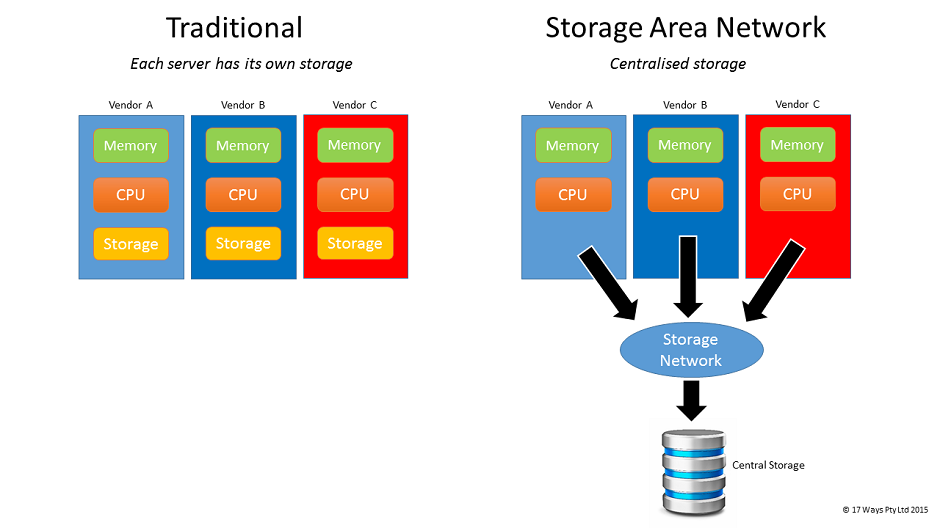

Storage Networks

Put all of your eggs in a bombproof basket and guard it with your life

Meanwhile, back in the Data Centre of the 90’s and early noughties, the number of servers continued to grow. These systems were not Open Source and most of them were running their own CPUs. About the only thing that the manufacturers of these computers agreed on was disk technology. This is the medium that they use to store information, similar to the storage in a laptop or iPhone.

Because this stuff was low level and not a big differentiator, the hardware vendors were more influenced by standards and Open Source, which meant that this technology could actually be shared. And if it can be shared then it doesn’t even need to be inside the same box. This led to the emergence of companies selling Data Centre storage systems. The hard drives effectively disappeared from the servers and became shared, with the data physically living on a new box in the corner of the Data Centre. Not only did this reduce cost by pooling all of the space requirements but it helped with disaster recovery as now only one system in the Data Centre needed to worry about copying data somewhere else instead of every system doing its own thing.
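To make the pooling argument concrete, here is a minimal sketch of the arithmetic. All of the figures are hypothetical and chosen only for illustration: four servers, each fitted with 8 TB of its own disk, compared with one shared storage system that holds the same data plus an assumed 25% of spare room.

```python
# Hypothetical figures for illustration only:
# (data actually stored, disk capacity installed) in TB for each server.
servers = {
    "email": (2, 8),
    "files": (5, 8),
    "database": (7, 8),
    "reports": (1, 8),
}


def dedicated_capacity(servers):
    """Total disk bought when every server carries its own drives."""
    return sum(installed for _stored, installed in servers.values())


def pooled_capacity(servers, headroom=0.25):
    """Disk needed when all data sits on one shared system.

    headroom is the assumed fraction of spare space kept for growth.
    """
    total_stored = sum(stored for stored, _installed in servers.values())
    return total_stored * (1 + headroom)


print("Dedicated disks:", dedicated_capacity(servers), "TB")  # 32 TB
print("Shared storage:", pooled_capacity(servers), "TB")      # 18.75 TB
```

The saving comes from sharing the spare room: with dedicated disks every server carries its own unused space, whereas the shared system keeps one pool of headroom for everyone.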

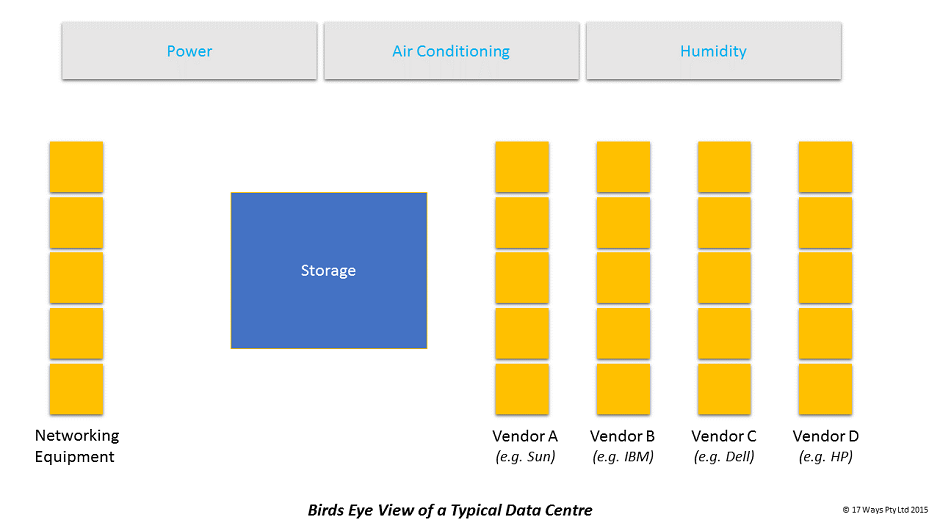

Data Centre Tour

This is a good point to stop and have a look at what was in a typical Data Centre a few years ago.

You enter a Data Centre through a security check. Nobody gets in without a valid reason and appropriate approvals. Once inside you will find it incredibly noisy. Most of this is due to fans inside the computers and air conditioning. At one end of the large room there will be some big white boxes. These are the air conditioners and power management systems. They make sure that the humidity, temperature and power remain constant.

The floor you stand on is raised. Underneath you are literally kilometres of cables connecting everything together. Probably in the middle of the room are a number of huge metal boxes. These are the storage systems. The rest of the room is full of computer racks, mostly identical. Down one side of the room is a series of racks labelled Networks, and covering the rest of the room are racks containing computers with the name of the technology/team that looks after them.

Outside the Data Centre the technology teams are typically organised by technology and vendor. A separate team looks after each type of technology present in the Data Centre. This is partly for convenience but also a function of the high level of technical skill needed to understand the different technology offerings.

One of the largest costs of the traditional Data Centre model is the need to run separate support teams for each of the technologies.

Aside: Data Centre Proliferation and Outsourcing

Up until now we have described a single Data Centre. In fact one of the issues that many companies face is a proliferation of Data Centres distributed around the world leading to multiplication of many of the costs. There is limited scale in having multiple Data Centres and issues like security, power and air conditioning need to be solved repeatedly in each new location with a different set of local providers and issues.

Even if you don't have a global problem there is still limited appeal in running your own Data Centre premises. Typically a Data Centre would be located in the same building as the staff, occupying expensive real estate and often located in the basement and at risk of flooding. The systems and people that you need to operate the Data Centre physical environment are above and beyond what you need for the rest of the building, so again there is no real scale factor in running your own Data Centre.

The answer to both of these problems is the same. Use a specialist Data Centre company to run your Data Centre space for you. They have the economy of scale and you can focus on the machines inside the Data Centre and not worry about the room itself. It can also be located away from your staff which is better for Disaster Recovery and cheaper. Many organisations use Data Centre providers to host their servers with the providers giving them a space in a large Data Centre with guaranteed power, humidity and temperature. An extra benefit is that often the Data Centre will also host some of the other companies that you need to connect to making it faster and easier to do business. Interestingly these are often the same physical Data Centres that the Cloud providers use, meaning that whether you run something inside your own Data Centre or in the Cloud, it can actually be running in the same room.

Whether you outsource your Data Centre facilities or run them yourself, the same challenges apply to the servers that are in them.

Standardisation

In our Data Centre we noticed something strange happening throughout the start of the 21st century. More and more hardware vendors were adopting x86 CPUs and standardising on Microsoft’s Windows operating system or the Open Source Linux operating system. Even Apple discarded their own chips to use Intel’s x86 and an Open Source operating system very similar to Linux. The racks in the Data Centres were still full of different vendors’ hardware but under the covers these computers were very similar. The battle between the hardware vendors had moved away from the hardware itself and on to how easy they could make it for you to run that hardware.

By 2010 the hardware arms race was over. Bigger, Faster, Better had given way to Easier and Cheaper.

Virtualisation

As PC Servers developed, they got more and more powerful. These computers are not the simple PC that you have on your desk; they have many times the performance and capacity. The problem was that it wasn’t always possible to harness all the compute power. Many of the operating systems of the time, such as Microsoft Windows, were originally only intended for use on personal computers. As such they were not particularly good at running multiple things at the same time. This led to a proliferation of 'single-use' servers in the Data Centre running things like Email, File Sharing and security services. As a result, many of these machines sat idle for most of the time.

The goal was then to somehow harness this unused capacity. It was only a matter of time before virtualisation became possible and offered a very effective way of making optimal use of computing resources. Ignoring the noise from mainframe people shouting “We did that 50 years ago!” the PC Server software vendors re-invented the ability to run a number of “virtual machines” on a single physical machine. Instead of having several racks full of computers you could now have one computer that, to everyone outside the Data Centre, looked like lots of computers.

Virtualisation is very important because it moves IT infrastructure away from the need to scale by adding boxes. Now you can scale your systems up or down without anyone needing to physically touch them (provided you have capacity). This reduces time to market and requires no long term commitment.
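For readers who like to see the idea in numbers, the sketch below shows how mostly idle single-use servers might be consolidated as virtual machines onto fewer physical hosts. It is an illustration under stated assumptions, not how any particular virtualisation product places workloads: the load figures are hypothetical, and the packing rule is a simple first-fit-decreasing heuristic that we chose for brevity.

```python
def consolidate(loads, host_capacity=100):
    """Pack server loads onto hosts using first-fit decreasing.

    loads: the typical load of each single-use server, as a percentage
           of one physical host's capacity (hypothetical figures).
    host_capacity: the most load we allow on any one host.
    Returns a list of hosts, each a list of the loads placed on it.
    """
    hosts = []
    for load in sorted(loads, reverse=True):
        for host in hosts:
            # Place the load on the first host that still has room.
            if sum(host) + load <= host_capacity:
                host.append(load)
                break
        else:
            # No existing host had room, so start a new one.
            hosts.append([load])
    return hosts


# Eight single-use servers, each idle most of the time (hypothetical).
loads = [10, 15, 5, 20, 10, 25, 5, 10]

# Cap each host at 80% so there is some spare capacity for peaks.
hosts = consolidate(loads, host_capacity=80)
print(len(loads), "single-use servers fit on", len(hosts), "physical hosts")
```

With these figures, eight physical machines collapse onto two hosts. The 80% cap reflects the caveat in the text: scaling up without touching hardware only works while spare capacity remains.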

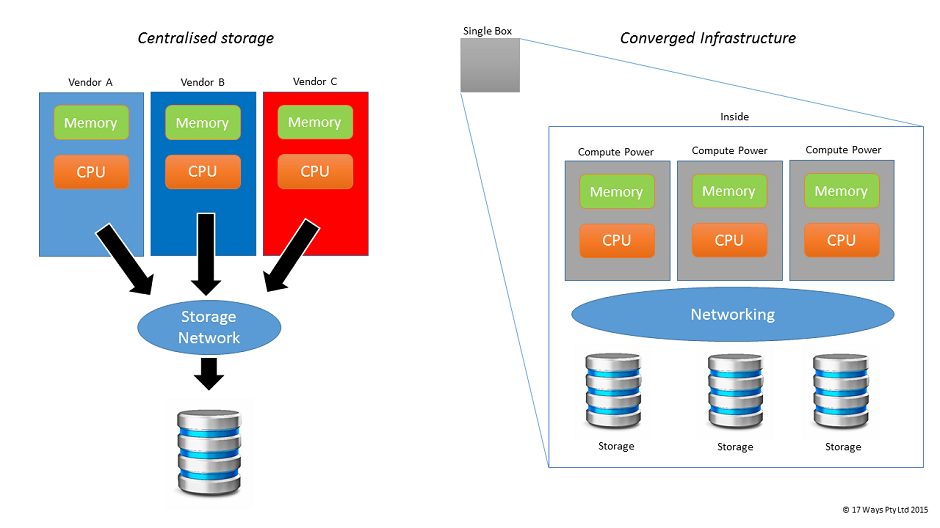

Converged Infrastructure

At the start of our story the Data Centre consisted of lots of completely different things from different vendors right through the technology stack. With the separation of storage from computing, we saw the development of storage area networks and standardisation across that technology. With computing, many vendors moved towards using x86 CPUs, which led to commoditisation and standardisation in this space. We also have virtualisation, so we have fewer servers. The data sits within the Storage Area Network (SAN) and the (virtual) server runs on the compute platform. They are both connected over a network (all those cables within a rack, under the floor and some clever network devices). The problem now is the physical amount of interconnectivity required under this model. Each physical server generally needs 5 or 6 connections (redundant network connections, redundant SAN connections and possibly redundant out-of-band management), which adds up to a lot of physical cables, switch ports and licence costs. The complexity and cost have driven a new approach, which is to bring all these components together into one cohesive system. This approach has been labelled Converged Infrastructure.
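The cabling problem is easy to put into numbers. The sketch below assumes one way of arriving at the 5 or 6 connections mentioned above (two network, two SAN and one or two out-of-band management links). The split between link types and the figure of 200 servers are our own assumptions for illustration.

```python
def connections_per_server(network=2, san=2, management=1):
    """Connections for one physical server.

    The defaults assume redundant (paired) network and SAN links and a
    single management link; pass management=2 for a redundant pair.
    """
    return network + san + management


def total_connections(servers, per_server):
    """Cables (and matching switch ports) for a room of servers."""
    return servers * per_server


servers = 200  # hypothetical Data Centre size

single_mgmt = total_connections(servers, connections_per_server())
redundant_mgmt = total_connections(servers, connections_per_server(management=2))

print("Single management link:", single_mgmt, "connections")        # 1000
print("Redundant management links:", redundant_mgmt, "connections")  # 1200
```

Every one of those connections needs a cable and a switch port at the other end, which is why the cost grows so quickly as servers are added.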

The next step in convergence is to put all of the equipment into a single physical box. This can be upgraded to add more storage or compute power and is faster because all the communication is internal and doesn't need to cross the cables under the floor.

Converged Infrastructure takes the compute power of the x86 servers, the network technology from those clever boxes (but much simplified and optimised) and the storage, and it puts them all together along with the virtualisation technology and some software to manage it all. The mainframe guys think it is the 1960’s again but to everyone else it is new and it represents a massive step forward.

Again, if you are outside the Data Centre you can’t really tell the difference, except adding new servers or disk space is now incredibly fast and your costs for running the Data Centre are lower.

The emergence of Converged Infrastructure is quite recent and very significant. It allows much more of the traditional infrastructure work to be done with software rather than hardware. Now you can add a server or extra disk space or memory with the click of a button. This is every Data Centre Manager's dream. Now you hardly ever need to do work inside the Data Centre, which greatly reduces the chance of mistakes being made. Implementing Converged Infrastructure also comes with an organisational change aspect which is often overlooked. As you may remember, we previously had multiple teams running the technologies; as a minimum there was a network team, a storage team and a server team. Now everything is in a single box with a single set of management tools and it is important for any organisation that implements Converged Infrastructure to also change their operating structure to match the new approach.

Converged Infrastructure leads to the Software-Defined Data Centre - the ability to make changes to your Data Centre without physically touching any hardware.

You may also come across the term Hyper-Converged. This is similar to converged in that compute, network and storage components are physically brought together, and that these are managed by specific management software. The difference is the way the physical network, compute and storage components are brought together.

With converged, the technology components are physically grouped together. As such there is a chassis containing compute blades, a chassis containing hard drives and associated storage infrastructure, and a chassis containing network components. In some instances there is also a chassis containing the management hardware components.

With Hyper-converged, the technology components are physically brought together on the one physical unit / element. In other words Hyper-converged tends to be based on a physical compute blade that contains physical storage and network components. It may also contain additional hardware components housed on the blade to perform functions such as data compression or de-duplication.

The way converged and hyper-converged systems are built and expanded also differs. With converged, the systems are built using blocks of storage and computing. Expansion can usually be effected by adding compute blades or storage disks if capacity is available. If space is not available, another chassis or ‘block’ (either compute or storage) is added and the blades or disks are then installed as appropriate. With hyper-converged, the platform is built by bringing a number of units/elements together. Expansion is effected by simply adding another unit.

Conclusion

We have seen the Data Centre evolve over time from a collection of very different hardware components from different manufacturers through to sharing of technologies and the adoption of x86 and Open Source, and finally to Converged Infrastructure with virtualisation and the Software-Defined Data Centre.

This is a very significant change and much of it is still new and developing. Meanwhile Cloud Computing has been making major inroads into the areas where traditional Data Centre technology used to rule. Is Converged Infrastructure enough to save the Data Centre from extinction? When should you use Cloud and when should you use Converged? In Cloud vs Converged we go through the comparison and try to help you to make the right decisions. If you want some background on what Cloud Computing is you can also read our article An Introduction to Cloud Computing.